For beginners in Machine Learning, it is fitting that there is something as aptly named as the Confusion Matrix. It can be difficult to interpret the first time you encounter it, but for every machine learning engineer it is a fundamental tool to have in your toolkit.

The Confusion Matrix is a table for visualizing and measuring the performance of a classification model. Metrics such as Accuracy, Recall, Precision and Specificity can be computed directly from it, and ROC curves (and the AUC derived from them) are built from the confusion matrices obtained at different classification thresholds. Knowing which metric to use for a given problem is a different ball game altogether.

In machine learning, different methods are used to solve different problems. Most of them involve splitting your dataset into training data and test data, so that you can check how well the model performs instead of going in blindly. The model is trained on the training data and then evaluated on the test data. A Confusion Matrix is one tool for testing how correct the trained model is on that data.

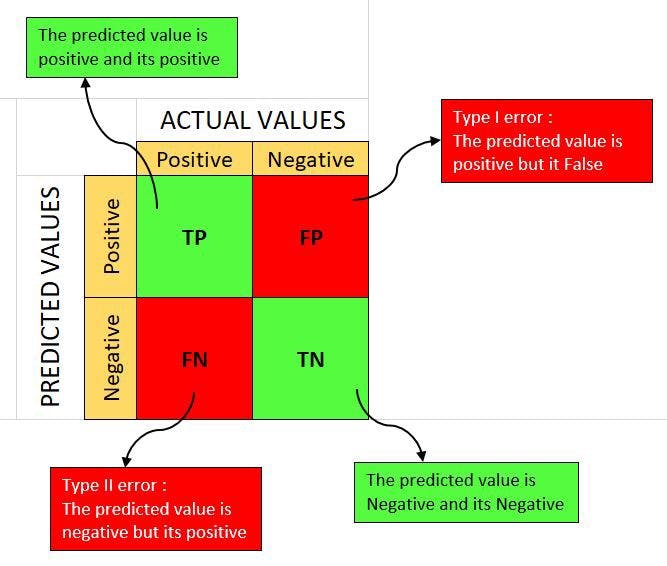

The rows in a Confusion Matrix represent the results predicted by the model, while the columns represent the known truth. Some tools and textbooks swap the two axes, so always check the labels. This layout is where the concepts of True Positives, True Negatives, False Positives and False Negatives come from.

Each of these is explained below:

- A true positive indicates that the prediction was positive and it is true (correct). In other words, a true positive is an outcome where the model correctly predicts the positive class, e.g. a woman is predicted to be pregnant and she is pregnant.

- A true negative indicates that the prediction was negative and it is true (correct). In other words, it is an outcome where the model correctly predicts the negative class, e.g. a man is predicted to not be pregnant and he is not pregnant.

- A false positive indicates that the prediction was positive and it is false (incorrect). In other words, it is an outcome where the model incorrectly predicts the positive class, e.g. a man is predicted to be pregnant but he is not pregnant.

- A false negative indicates that the prediction was negative and it is false (incorrect). In other words, it is an outcome where the model incorrectly predicts the negative class, e.g. a woman is predicted to not be pregnant but she is.
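
To make the four outcomes concrete, here is a minimal sketch in plain Python that counts them from a list of known labels and a list of predictions. The function name `confusion_counts`, the label encoding (1 for positive, 0 for negative) and the sample data are all made up for illustration; the matrix is laid out as described above, with rows for predictions and columns for the truth.

```python
def confusion_counts(actual, predicted, positive=1):
    """Count (TP, FP, FN, TN) for a binary problem.

    `positive` is the label treated as the positive class (an assumption
    of this sketch; here 1 = positive, 0 = negative).
    """
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1  # predicted positive, actually positive
        elif p == positive:
            fp += 1  # predicted positive, actually negative
        elif a == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1  # predicted negative, actually negative
    return tp, fp, fn, tn


# Hypothetical labels, purely for illustration.
actual    = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 0, 1, 0, 1, 0, 0, 1]

tp, fp, fn, tn = confusion_counts(actual, predicted)

# Rows = predicted class, columns = actual class.
matrix = [
    [tp, fp],  # predicted positive
    [fn, tn],  # predicted negative
]
print(matrix)  # [[3, 1], [1, 3]]
```

In practice you would usually rely on a library routine for this, but note that libraries may order the rows and columns differently from the layout used here.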

As seen from the diagram above, the True Positive is in the top left corner and the True Negative is in the bottom right corner. These are the correct predictions, and they lie on the main diagonal (top left to bottom right). The numbers off the diagonal show how many samples the algorithm classified wrongly. So, comparing the confusion matrices of two different models on the same test data shows at a glance which one makes more mistakes.
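
The four cells are also enough to compute the metrics named earlier. The sketch below uses the standard definitions of accuracy, precision, recall and specificity; the function name `metrics_from_counts` and the example counts are hypothetical, chosen only to show the arithmetic.

```python
def safe_div(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is 0."""
    return numerator / denominator if denominator else 0.0


def metrics_from_counts(tp, fp, fn, tn):
    """Compute common metrics from the four confusion matrix cells."""
    total = tp + fp + fn + tn
    return {
        # Share of all predictions that were correct (the diagonal).
        "accuracy": safe_div(tp + tn, total),
        # Of everything predicted positive, how much really was positive.
        "precision": safe_div(tp, tp + fp),
        # Of everything actually positive, how much the model found.
        "recall": safe_div(tp, tp + fn),
        # Of everything actually negative, how much the model found.
        "specificity": safe_div(tn, tn + fp),
    }


# Hypothetical counts for a test set of 100 samples.
scores = metrics_from_counts(tp=40, fp=10, fn=5, tn=45)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

Returning 0.0 when a denominator is zero is a design choice of this sketch; some tools raise a warning or return an undefined value instead.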

Not all confusion matrices are 2×2; the size of a confusion matrix is determined by the number of classes to be predicted. What stays constant, no matter the size of the matrix, is that the diagonal shows how many predictions the model got right.
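
As a rough illustration of the multi-class case, the sketch below builds a 3×3 matrix in plain Python and sums its diagonal. The class names, the sample labels and the helper `confusion_matrix` are invented for this example; rows again hold the predicted class and columns the actual class.

```python
def confusion_matrix(actual, predicted, labels):
    """Build an n x n matrix: rows = predicted class, columns = actual class."""
    index = {label: i for i, label in enumerate(labels)}
    n = len(labels)
    matrix = [[0] * n for _ in range(n)]
    for a, p in zip(actual, predicted):
        matrix[index[p]][index[a]] += 1
    return matrix


# Hypothetical three-class problem.
labels    = ["cat", "dog", "bird"]
actual    = ["cat", "cat", "dog", "dog", "bird", "bird", "bird"]
predicted = ["cat", "dog", "dog", "dog", "bird", "cat", "bird"]

matrix = confusion_matrix(actual, predicted, labels)
for label, row in zip(labels, matrix):
    print(f"predicted {label:>4}: {row}")

# Correct predictions sit on the main diagonal, whatever the matrix size.
correct = sum(matrix[i][i] for i in range(len(labels)))
print(f"{correct} of {len(actual)} predictions are correct")  # 5 of 7
```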